Simplifying Transformer Model Training

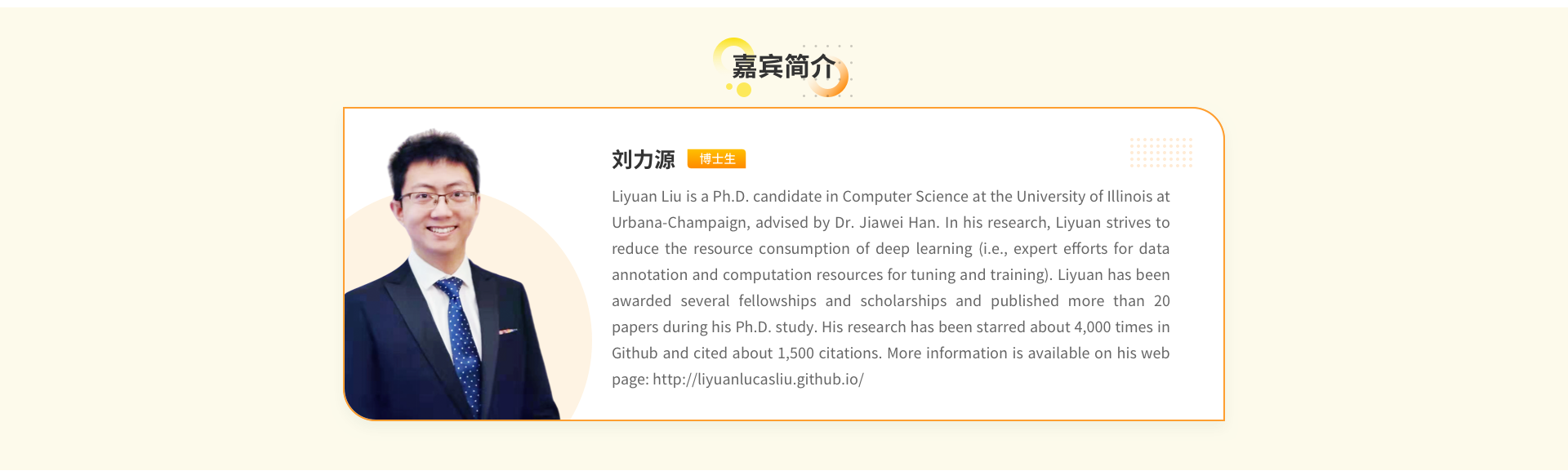

Transformers have led to a series of breakthroughs across a wide range of deep learning tasks. However, with great power come great challenges: training Transformers requires non-trivial effort in configuring the model and the optimizer and in tuning other hyperparameters. Given the resource constraints inherent in real-world tasks, this additional effort has held back a wide range of research. In this talk, I will present our study toward effort-light Transformer training. First, I will introduce our analysis of the underlying mechanism of learning rate warmup. I will then discuss our analysis of what complicates Transformer training and introduce our study on how to make Transformers easy to train. Next, I will demonstrate that our research also opens new possibilities for moving beyond current neural architecture design and advancing the state of the art. Finally, I will give a brief outlook on future directions.